Web scraping projects using Python | Python projects for beginners to practice

One of the hottest topics on today's web is 'web scraping'. What is web scraping? In short, it is a technique for automatically collecting and analyzing data from websites. Python is on the rise across programming and the web sector. It is especially popular for data-heavy fields such as machine learning, object detection, and web scraping.

Why do we choose Python for web scraping?

Python is easy to write, and it is more readable and understandable than many other languages. Another reason we choose Python for web scraping is that it has a bunch of libraries for fetching and analyzing web content (notably BeautifulSoup, urllib, socket, and so on).

In this post, the School of Beginners introduces some cool web scraping code in Python that will help you build awesome projects. This post is the beginning of our web scraping projects in Python. The objective of these programs is to analyze a website or a URL.
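Analyzing a URL usually starts with splitting it into parts. The first program in this post expects a bare host name such as 'quotes.toscrape.com', so it can help to pull the host (and port) out of a full URL first. Here is a minimal sketch using the standard library's `urllib.parse.urlsplit`. The helper name `host_and_port` and the default-port table are our own illustrative assumptions, not part of the original programs.

```python
from urllib.parse import urlsplit

# Hypothetical lookup table: well-known default ports for each scheme
DEFAULT_PORTS = {'http': 80, 'https': 443}


def host_and_port(url):
    """Return (hostname, port) for a URL, filling in the scheme's default port."""
    # Without a scheme, urlsplit() puts the host in .path instead of .netloc,
    # so assume https:// when the user typed a bare domain name.
    if '://' not in url:
        url = 'https://' + url
    parts = urlsplit(url)
    # .port is None when the URL has no explicit port, e.g. 'https://example.com/'
    return parts.hostname, parts.port or DEFAULT_PORTS.get(parts.scheme)
```

For example, `host_and_port('https://quotes.toscrape.com/page/2/')` gives `('quotes.toscrape.com', 443)`, and the host part can be passed straight to `gethostbyname()`.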

Fetching a URL: get the host address and open port numbers

Steps to "Fetching a URL: get the host address and open port numbers"

1. Import the required packages. Python's 'socket' library is the best fit for working with ports.

2. We will print the time taken to resolve the URL and find the host and port addresses. For this, we will use the 'time' module from Python's standard library.

3. Take the input as a 'URL' (the domain name of a site, without 'https://').

4. The rest is easy because Python ships with the socket library. Get the host IP address with the 'gethostbyname()' function.

5. Then, go through the ports one by one (ports 50 to 499 in the code below) and find the open ones by attempting a TCP connection to each port of that host. Print every open port.

6. Find the time taken by subtracting the start time from the current time at the end of the program.
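Steps 4 and 5 can be sketched as a small reusable function. This is a minimal sketch, not the author's program: the name `scan_ports` and the 0.5-second timeout are our own assumptions. Unlike the full program in this post, it sets a timeout on each socket so that ports which silently drop packets cannot stall the scan, and it uses a `with` statement so every socket is closed.

```python
import socket


def scan_ports(host, ports, timeout=0.5):
    """Return the TCP ports on `host` that accept a connection.

    connect_ex() returns 0 when the connection succeeds and an error
    number otherwise, so no exception handling is needed per port.
    """
    ip = socket.gethostbyname(host)  # resolve the name once, as in step 4
    open_ports = []
    for port in ports:
        # 'with' closes the socket even if something goes wrong
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            # Assumed timeout: give up on a port after `timeout` seconds
            s.settimeout(timeout)
            if s.connect_ex((ip, port)) == 0:
                open_ports.append(port)
    return open_ports
```

For example, `scan_ports('quotes.toscrape.com', range(50, 500))` scans the same range as the full program. To time it as in step 6, record `time.perf_counter()` before the call and subtract it from a second reading afterwards.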

Python code for "Fetching a URL: get the host address and open port numbers"

```python
from socket import *
import time

# Store the time at which the program starts
startTime = time.time()

if __name__ == '__main__':
    # Enter only the host name, e.g. 'quotes.toscrape.com'; don't include 'https://'
    target = input('Enter the url to be analyzed: ')

    # Resolve the host name to its IPv4 address
    Host_IP_address = gethostbyname(target)
    print('Starting scan on host:', Host_IP_address)

    # Try a TCP connection to every port from 50 to 499
    for i in range(50, 500):
        socket_fetching = socket(AF_INET, SOCK_STREAM)
        # connect_ex() returns 0 if the connection succeeds (port open) instead of raising
        connection_0_1 = socket_fetching.connect_ex((Host_IP_address, i))
        if connection_0_1 == 0:
            print('Port %d: OPEN' % (i,))
        socket_fetching.close()

    print('Time taken:', time.time() - startTime)
```

Input:

Enter the url to be analyzed: quotes.toscrape.com

Output:

Starting scan on host: 142.0.203.196

Port 80: OPEN

Port 443: OPEN

Time taken: 180.4532454

How will this mini-project help you?

1. Find out a website's host IP address and, from it, its hosting server.

2. Analyze which ports a website's server has open, a common first step before testing (or attacking) that server.

3. Measure how long it takes to scan a web server.

Get the status of a URL with emoji

Steps to "Get the status of a URL with emoji"

1. Install the required package (pip install emoji). The emoji package lets us show the output with emoji symbols.

2. Import all required packages.

3. Take a URL address as the input.

4. To open the URL and fetch its response, use the 'urlopen' function from 'urllib.request'.

5. Now, print the status with an emoji symbol. The result falls into one of three cases: 'HTTPSuccess', 'HTTPError', and 'URLError'. 'HTTPError' and 'URLError' are exception classes from 'urllib.error'; 'HTTPSuccess' is simply our name for the default case, in which 'urlopen' returns a response without raising an error.

Case 'HTTPSuccess': If the request succeeds, print the success status code and a thumbs_up emoji.

Case 'HTTPError': If the server answers with an error status (for example 404), print the HTTP error status code and a thumbs_down emoji from the emoji library.

Case 'URLError': If the URL is bad or the connection to it fails, print the error and show a thumbs_down emoji from the emoji library.
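The three cases map directly onto a try/except chain. Below is a minimal, standard-library-only sketch that returns the case name, status code, and message as a tuple instead of printing emoji, which makes it easy to reuse inside a status-checking tool. The function name `check_url_status` and the 10-second timeout are our own assumptions.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def check_url_status(url, timeout=10):
    """Classify a request into the 'HTTPSuccess' / 'HTTPError' / 'URLError' cases.

    Returns a (case, status_code, message) tuple; status_code is None
    when no HTTP response was received at all.
    """
    try:
        with urlopen(url, timeout=timeout) as response:
            return ('HTTPSuccess', response.status, response.reason)
    except HTTPError as e:
        # Must come before URLError, because HTTPError is a subclass of URLError
        return ('HTTPError', e.code, str(e.reason))
    except URLError as e:
        # DNS failure, refused connection, ...: there is no HTTP status code
        return ('URLError', None, str(e.reason))
```

For example, `check_url_status('https://quotes.toscrape.com')` should return `('HTTPSuccess', 200, 'OK')` while the site is up, and a mistyped domain falls into the 'URLError' case with a status code of `None`.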

Python code for "Get the status of a URL with emoji"

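```python
from urllib.request import urlopen
from urllib.error import URLError, HTTPError
import emoji as em

# Take the URL as input, e.g. https://quotes.toscrape.com
# strip() removes accidental spaces around the pasted URL
requestedURL = input("Enter the URL to be analyzed: ").strip()

# Get the response from the URL and print the status code and an emoji with a matching message
try:
    response = urlopen(requestedURL)
    # Request succeeded: print the success status code and a thumbs_up emoji
    print('Status code: ' + str(response.status) + ' ' + em.emojize(':thumbs_up:'))
    print('Message: Request succeeded. The request returned message - ' + response.reason)

except HTTPError as e:
    # HTTPError must be caught before URLError because it is a subclass of URLError.
    # Request failed: print the HTTP error status code and a thumbs_down emoji
    print('Status code: ' + str(e.code) + ' ' + em.emojize(':thumbs_down:'))
    print('Message: Request failed. Request returned reason - ' + str(e.reason))

except URLError as e:
    # Connection failure or bad host; e.reason often looks like '[Errno -2] Name or service not known'
    reason = str(e.reason)
    if ']' in reason:
        code, message = reason.split(']', 1)
        code = code.replace('[', '')
    else:
        # Some reasons (e.g. 'timed out') carry no '[Errno ...]' prefix
        code, message = 'Error', reason
    print('Status: ' + code + ' ' + em.emojize(':thumbs_down:'))
    print('Message: ' + message.strip())

except ValueError as e:
    # Raised for malformed input such as a URL without 'https://'
    print('Status: Invalid URL ' + em.emojize(':thumbs_down:'))
    print('Message: ' + str(e))
```

Input: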

Enter the URL to be analyzed: https://quotes.toscrape.com


Output:

Status code: 200 👍

Message: Request succeeded. The request returned message - OK

How will this mini-project help you?

1. Before scraping (or attacking) any server or web page, check its status first; the server's response helps you decide the next steps.

2. You learn how to use the emoji package.

3. You can show the status of any server or web page with an emoji on a website.

4. You can build a tool that prints a website's status.

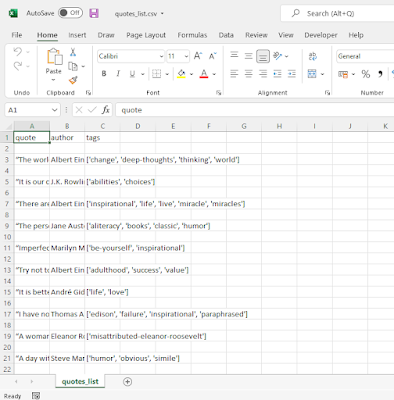

Web scraping quotes using Python

Steps to "Web scraping quotes using Python"

1. Install the required packages: 'pip install beautifulsoup4 requests'.

2. Import all required libraries.

3. Set the URL to scrape. In the code below it is hard-coded as 'https://quotes.toscrape.com'; change it as you want.

4. Fetch the HTML from the requested URL with the 'requests' library and parse it using 'html.parser'.

5. We will save all parsed results as a 'CSV' file, using Python's built-in 'csv' module.

6. Set the field names for the CSV file and write them as the header row using the 'writeheader()' function.

7. Go through every quote block on the page and extract the text of the quote, the author, and the tags. When the page is done, follow the 'Next' link and repeat until there are no more pages.

8. Write each extracted quote as a row in the CSV file under the matching field names.
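Steps 5, 6, and 8 can be tried on their own before any scraping happens. This is a minimal sketch, assuming each scraped quote has already been collected into a dict with 'quote', 'author', and 'tags' keys; the function name `write_quotes_csv` and the sample quote are hypothetical, made up for illustration. Joining the tag list into one string keeps the CSV cell readable instead of storing Python's list syntax.

```python
import csv
import io


def write_quotes_csv(rows, out):
    """Write quote dicts to an open text file (or any file-like object) as CSV."""
    writer = csv.DictWriter(out, fieldnames=['quote', 'author', 'tags'])
    writer.writeheader()  # first row: quote,author,tags
    for row in rows:
        writer.writerow({
            'quote': row['quote'],
            'author': row['author'],
            # Join the tag list into one cell, e.g. ['life', 'love'] -> 'life, love'
            'tags': ', '.join(row['tags']),
        })


# Hypothetical sample data, written to memory instead of a file.
# With a real file, use open('quotes_list.csv', 'w', newline='', encoding='utf-8').
sample = [{'quote': 'Hello, world', 'author': 'A. Author', 'tags': ['life', 'love']}]
buffer = io.StringIO(newline='')
write_quotes_csv(sample, buffer)
print(buffer.getvalue())
```

Note how the csv module automatically wraps fields that contain commas in double quotes, so 'Hello, world' stays in a single column.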

Python code for "Web scraping quotes using Python"

```python
from urllib.parse import urljoin
import csv

import requests
from bs4 import BeautifulSoup

# URL of the website; change the address as you want
requestedURL = 'https://quotes.toscrape.com'

try:
    # Fetch the first page and parse its HTML with the 'html.parser' parser
    html = requests.get(requestedURL)
    html.raise_for_status()
    B_soup = BeautifulSoup(html.text, 'html.parser')

    # 'with' closes the file even on errors; newline='' avoids blank rows on Windows
    with open('quotes_list.csv', 'w', newline='', encoding='utf-8') as csv_file:
        fieldnames = ['quote', 'author', 'tags']
        dictwriter = csv.DictWriter(csv_file, fieldnames=fieldnames)
        # Write the header row
        dictwriter.writeheader()

        # Keep going while the page has a "Next" button
        while True:
            # Every quote on the page sits in a <div class="quote">
            for quote in B_soup.find_all('div', {'class': 'quote'}):
                text = quote.find('span', {'class': 'text'}).text
                author = quote.find('small', {'class': 'author'}).text
                tags = [tag.text for tag in quote.find_all('a', {'class': 'tag'})]
                # Write the quote, author and tags as one CSV row
                dictwriter.writerow({'quote': text, 'author': author, 'tags': ', '.join(tags)})

            # The "Next" button is an <li class="next"> holding a relative link such as '/page/2/'
            next_li = B_soup.find('li', {'class': 'next'})
            if not next_li:
                break

            # Fetch and parse the next page
            html = requests.get(urljoin(requestedURL, next_li.a['href']))
            html.raise_for_status()
            B_soup = BeautifulSoup(html.text, 'html.parser')

except Exception as e:
    # Network, file, or parsing errors end up here
    print('Error while scraping:', e)
```

Output:

A CSV file named 'quotes_list.csv' with one row per quote (columns: quote, author, tags).

How will this mini-project help you?

1. You learn how to use Python's Beautiful Soup package.

2. You can collect information from a website into a clean CSV file within minutes.

3. You can adapt this mini-project into a tool that copies the content of other websites. All you have to do is inspect the target site's HTML tags (such as 'div' and 'span') and attributes (such as 'class' and 'id') and change the selectors in the code to match.

Why did we use 'try' and 'except' in these Python programs?

Well, you are fetching URLs from other sources, and lots of things can go wrong: the URL may be mistyped, the server may be down, or the network may fail. You have to handle these errors, as well as errors in your own code. The 'try' and 'except' keywords let you catch errors in a block and keep your program running smoothly. We put all the fetching and parsing work in the 'try' block; if an error is raised there, Python jumps to the matching 'except' block, which handles it instead of crashing the program.
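To make the idea concrete, here is a minimal sketch of a fetch helper that keeps the risky network call inside 'try' and handles each kind of failure in its own 'except' block. The name `safe_fetch` and the choice to return `None` on failure are our own illustrative assumptions, not code from the programs above.

```python
from urllib.error import URLError
from urllib.request import urlopen


def safe_fetch(url, timeout=10):
    """Return the page body as text, or None if fetching fails."""
    try:
        # Everything that talks to the outside world goes in the try block
        with urlopen(url, timeout=timeout) as response:
            return response.read().decode('utf-8', errors='replace')
    except ValueError as e:
        # Malformed URLs, e.g. 'quotes.toscrape.com' without 'https://'
        print('Bad URL:', e)
    except URLError as e:
        # DNS failures, refused connections, and HTTP errors (HTTPError is a subclass)
        print('Could not fetch', url, '->', e.reason)
    except OSError as e:
        # Other network errors, e.g. a timeout while reading the body
        print('Network error while reading', url, '->', e)
    return None
```

Because every failure path ends in `return None`, the caller only needs one check (`if page is None: ...`) instead of its own try/except.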

A special thanks to GitHub